How Minimax-01 Achieves 1M Token Context Length with Linear Attention (MIT)

I've dug into the internals of an MIT-licensed MoE system that uses linear attention (Lightning Attention) to extend its context length to 1M input tokens.

I’ve recently dug into linear attention to understand why these attention mechanisms haven’t gained much traction compared to softmax attention (whose cost grows quadratically with sequence length).

In theory, they should be better, since their cost grows only linearly with sequence length, which allows for much longer contexts.

However, FlashAttention managed to get some more juice out of the quadratic softmax attention and extend the context length by sheer GPU optimization (which is great).

This is where I got into Lightning Attention, which cleverly mixes both the FlashAttention type of optimization and the linear attention type of algorithm.

Minimax recently released a large-scale, production-grade implementation of Lightning Attention as an open-weight, MIT-licensed model!

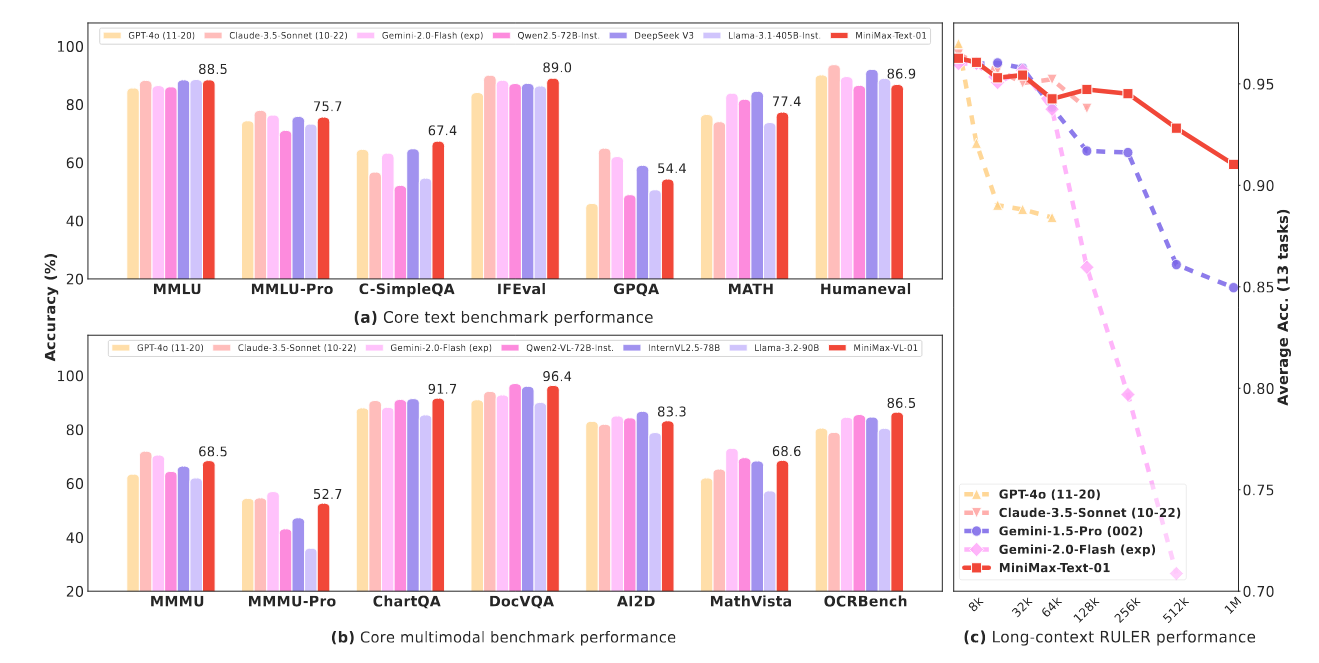

With some pretty neat results!

Let’s go through the high-level theory here and figure out how the authors put together a large-scale linear attention model.

TLDR: The Main Findings

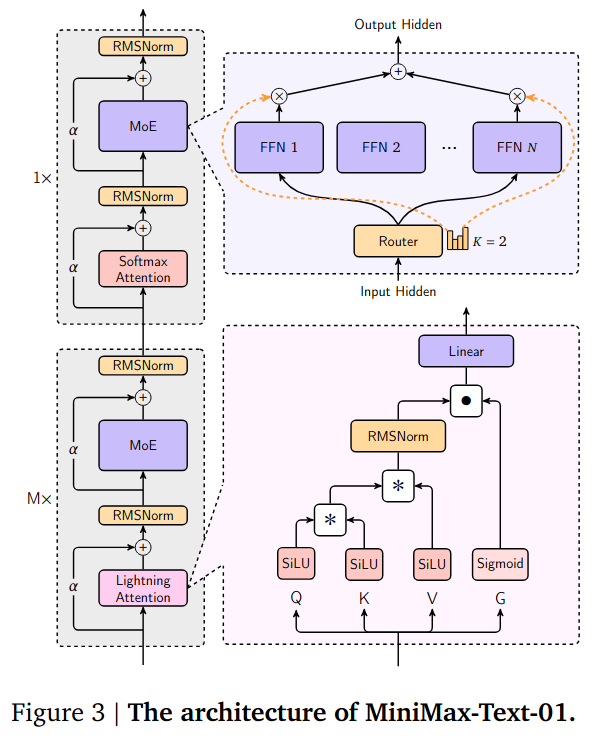

The Minimax-01 model integrates Lightning Attention with a GPU-optimized Mixture of Experts (MoE). Lightning Attention is hybridized with softmax attention at a 7:1 ratio: one softmax attention block after every seven Lightning Attention blocks.

This combination allows for:

Long Context Lengths: Handling sequences of up to 1 million tokens in training and up to 4 million tokens at inference.

Efficient Scaling: Combining linear attention with MoE to achieve massive scale without compromising performance. A whole lot of GPU optimization went on behind the scenes to make it happen (section 3 of the paper).

On par with closed source: The model is on par with leading closed-source softmax-attention models on most benchmarks and excels at long-context tasks.

The model is huge, with 456 billion parameters, and they even managed to slap a vision module on top to make it multimodal.

The Problem with Softmax Attention

Most leading large transformer-based models use softmax attention.

This type of attention performs very well. However, its computational complexity is quadratic in the sequence length: doubling the input length roughly quadruples the compute, and a naive implementation that stores the full attention matrix also quadruples its memory.

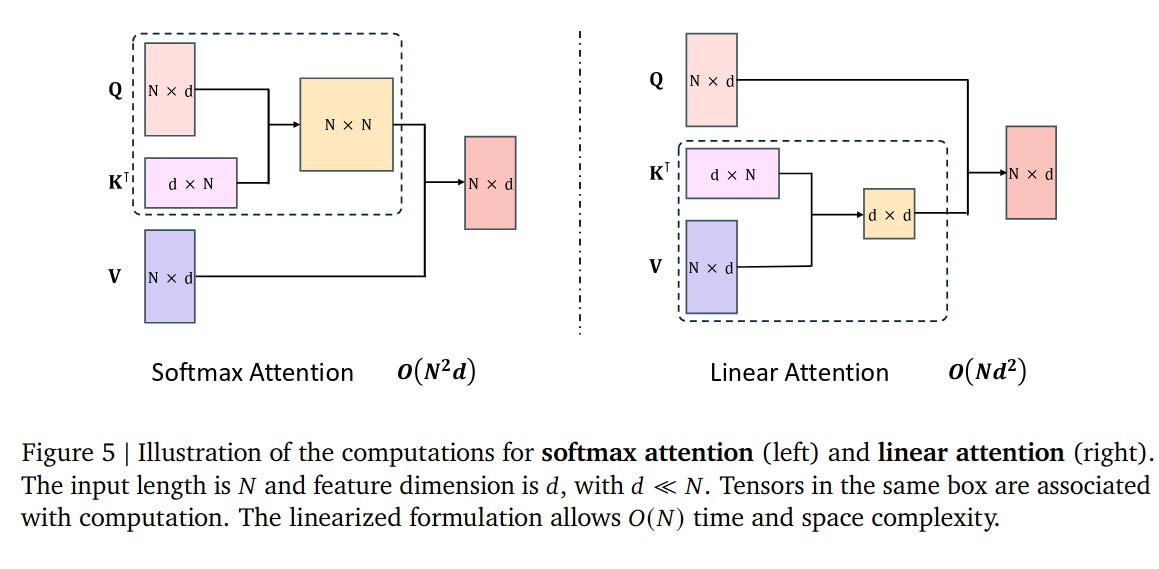

Cool illustration taken from this blog post:

This quadratic complexity becomes a severe bottleneck when dealing with long sequences, like those found in document-level tasks, long-form question answering, or high-resolution images.
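To put numbers on that bottleneck, here is a back-of-the-envelope sketch. It assumes a naive implementation that materializes the full n x n score matrix for a single attention head in 2-byte (fp16/bf16) precision; the function name is just for illustration.

```python
def attention_matrix_bytes(seq_len: int, bytes_per_score: int = 2) -> int:
    """Bytes for one n x n attention score matrix (one head, one sequence),
    assuming 2-byte fp16/bf16 scores as a naive implementation would store them."""
    return seq_len * seq_len * bytes_per_score


for n in (4_096, 32_768, 1_000_000):
    gb = attention_matrix_bytes(n) / 1e9
    print(f"{n:>9,} tokens -> {gb:,.3f} GB per head")
```

Each doubling of the sequence length quadruples the result, and at 1M tokens a single head's score matrix alone would take about 2 TB (10^12 scores x 2 bytes).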

Addressing the Bottleneck with FlashAttention

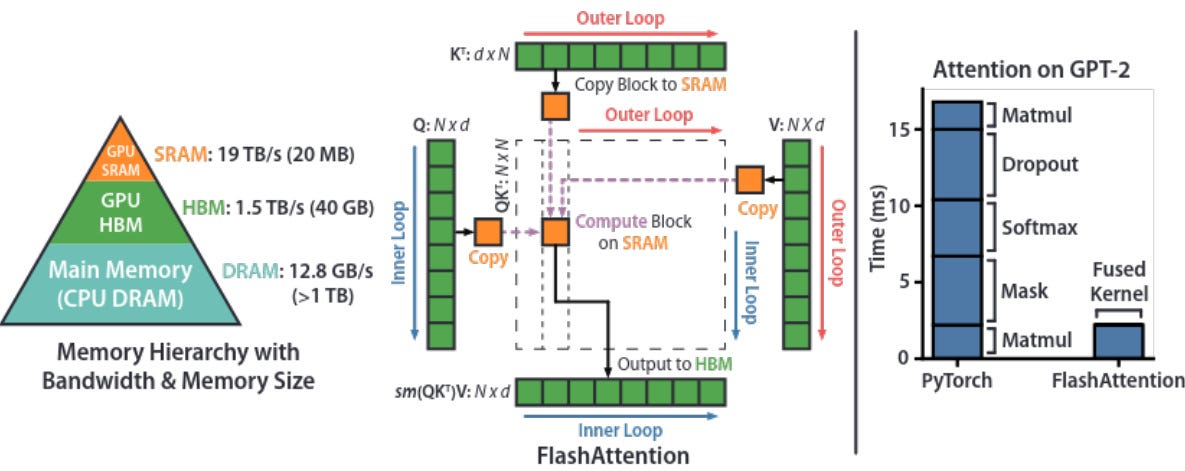

To tackle the softmax attention bottleneck, Dr. Tri Dao introduced FlashAttention in 2022, a method that makes exact attention much more efficient on GPUs.

The core realization was that most of the inefficiency in softmax attention came from reading and writing memory, not from calculating attention per se.

This means that just by managing GPU memory better, massive gains could be had with nothing changing on the model side.

FlashAttention did that by:

IO-Aware Computation: It optimizes the attention computation by minimizing data movement between the GPU’s high-bandwidth memory (HBM) and on-chip SRAM, leveraging tiling techniques to reuse data efficiently.

Exact Attention Calculation: Unlike some approximate methods, FlashAttention computes exact attention, ensuring no loss in model quality. That was a pretty big deal: effectively a free lunch!
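The piece that lets FlashAttention tile the computation and still stay exact is the "online softmax": keys and values are processed one tile at a time while carrying a running max, a running normalizer and a running weighted sum, which get rescaled whenever the max changes. Below is a minimal pure-Python sketch for a single query, assuming scaled dot-product scores; the function names are hypothetical, and it shows only the math, not the actual CUDA kernel or its SRAM/HBM data movement.

```python
import math


def naive_attention(q, keys, values):
    """Reference: exact softmax attention for one query, full score vector in memory."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [sum(qi * ki for qi, ki in zip(q, k)) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]


def tiled_attention(q, keys, values, block_size=2):
    """Same result, visiting keys/values one tile at a time (online softmax)."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf        # largest score seen so far
    running_sum = 0.0              # softmax denominator relative to running_max
    acc = [0.0] * len(values[0])   # un-normalized weighted sum of values
    for start in range(0, len(keys), block_size):
        k_tile = keys[start:start + block_size]
        v_tile = values[start:start + block_size]
        scores = [sum(qi * ki for qi, ki in zip(q, k)) * scale for k in k_tile]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new max
        # (on the first tile this is exp(-inf) == 0.0, which is harmless).
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, v_tile):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * vj for a, vj in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.5], [0.3, 0.3]]
values = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0], [0.5, 0.5], [2.0, 0.0]]
print(naive_attention(q, keys, values))
print(tiled_attention(q, keys, values, block_size=2))
```

Both functions return the same vector up to floating-point rounding for any `block_size`; the tiled version simply never holds the full score vector at once.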

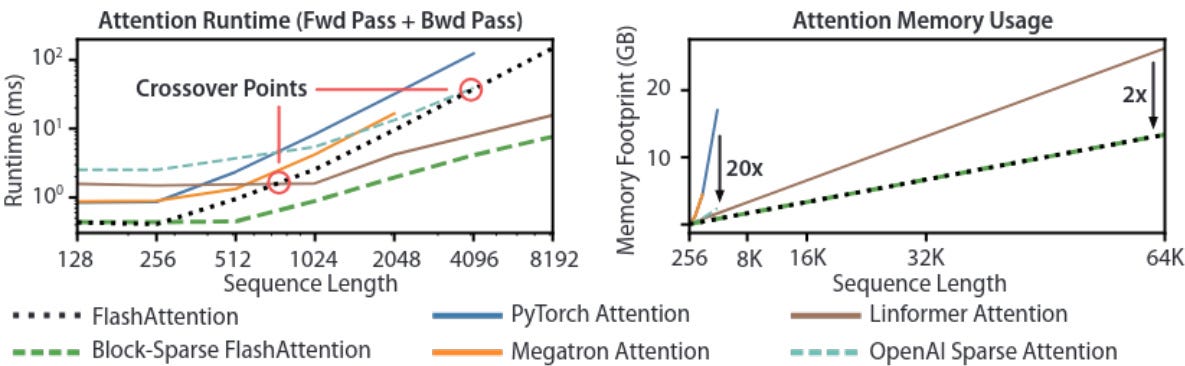

Even compared with linear attention mechanisms, FlashAttention could handle longer sequence lengths with a comparable or smaller memory footprint, simply by using the GPU more efficiently.

Main Impact:

Speed Improvements: FlashAttention significantly speeds up the attention computation, making it feasible to train models with longer sequences.

Memory Efficiency: It reduces the attention memory requirement from quadratic to linear in the sequence length, allowing for larger batch sizes and models.

FlashAttention was then integrated into large models, and research into improving attention's GPU utilization continued. We even got FlashAttention-2.

Where Linear Attention Falls Short

Difference between softmax attention and linear attention.
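To make that difference concrete: softmax attention computes softmax(QK^T)V, and the exp inside the softmax forces you to build the full n x n score matrix first. Linear attention replaces exp(q·k) with a product of feature maps phi(q)·phi(k), so the matrix product can be re-associated from (phi(Q)phi(K)^T)V to phi(Q)(phi(K)^T V), where the middle term is only d x d_v. The sketch below assumes the elu(x) + 1 feature map (one common choice, not necessarily what any particular model uses) and non-causal attention; all helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Plain-Python product of an (n x k) and a (k x m) list-of-lists matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def phi(x):
    """Assumed feature map elu(x) + 1, which keeps every entry positive."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention_left(q, k, v):
    """(phi(Q) phi(K)^T) V: materializes an n x n matrix, quadratic in n."""
    fq, fk = [phi(r) for r in q], [phi(r) for r in k]
    scores = matmul(fq, transpose(fk))        # n x n
    out = matmul(scores, v)
    return [[x / sum(s_row) for x in o_row] for o_row, s_row in zip(out, scores)]


def linear_attention_right(q, k, v):
    """phi(Q) (phi(K)^T V): the state is d x d_v no matter how long the sequence is."""
    fq, fk = [phi(r) for r in q], [phi(r) for r in k]
    kv = matmul(transpose(fk), v)             # d x d_v
    k_sum = [sum(col) for col in zip(*fk)]    # length d, gives the normalizer
    out = matmul(fq, kv)
    return [[x / sum(a * b for a, b in zip(f_row, k_sum)) for x in o_row]
            for o_row, f_row in zip(out, fq)]


Q = [[0.2, -0.4], [1.0, 0.3], [-0.7, 0.5]]
K = [[0.1, 0.9], [-0.3, 0.2], [0.6, -1.2]]
V = [[1.0, 0.0, 2.0], [0.5, -1.0, 0.0], [3.0, 1.0, -2.0]]
print(linear_attention_left(Q, K, V))
print(linear_attention_right(Q, K, V))
```

Both orderings give the same output; only the right-hand one avoids the n x n matrix, which is where the linear cost comes from.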

While linear attention methods like Linformer and Performer offer theoretical computational advantages, they often face challenges in practice:

Performance Gap: Linear attention models struggled to match the performance of softmax-based Transformers, particularly on tasks requiring complex reasoning or long-range dependencies. This gap mattered even more once FlashAttention made exact softmax attention much cheaper to run!

Training Inefficiency: Some linear attention methods, especially those relying on cumulative summation (cumsum) for causal attention, suffer from inefficient training due to sequential dependencies, which hampers their scalability. This makes their theoretical advantage largely useless in practical GPU implementations.
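The cumsum problem shows up in the causal (autoregressive) case: token i may only see tokens 0..i, so the phi(K)^T V state has to be built as a running sum over the sequence. A minimal sketch, again assuming the elu(x) + 1 feature map and hypothetical helper names, comparing the quadratic masked form with the linear-time recurrent form:

```python
import math


def phi(x):
    """Assumed feature map elu(x) + 1 (keeps attention weights positive)."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_masked(q, k, v):
    """Quadratic reference: token i attends only to tokens 0..i."""
    out = []
    for i in range(len(q)):
        w = [dot(phi(q[i]), phi(k[j])) for j in range(i + 1)]
        out.append([sum(w[j] * v[j][c] for j in range(i + 1)) / sum(w)
                    for c in range(len(v[0]))])
    return out


def causal_cumsum(q, k, v):
    """Linear-time form: running (cumulative) sums of phi(k_j) v_j^T and phi(k_j).
    Step i needs the state left by step i-1, i.e. a sequential dependency."""
    d, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d)]  # cumsum of outer products phi(k_j) v_j^T
    k_sum = [0.0] * d                        # cumsum of phi(k_j), for the normalizer
    out = []
    for qi, ki, vi in zip(q, k, v):
        fk, fq = phi(ki), phi(qi)
        for r in range(d):
            k_sum[r] += fk[r]
            for c in range(d_v):
                state[r][c] += fk[r] * vi[c]
        z = dot(fq, k_sum)
        out.append([sum(fq[r] * state[r][c] for r in range(d)) / z for c in range(d_v)])
    return out


Q = [[0.2, -0.4], [1.0, 0.3], [-0.7, 0.5], [0.0, 0.8]]
K = [[0.1, 0.9], [-0.3, 0.2], [0.6, -1.2], [0.4, 0.4]]
V = [[1.0, 0.0], [0.5, -1.0], [3.0, 1.0], [-2.0, 0.5]]
print(causal_masked(Q, K, V))
print(causal_cumsum(Q, K, V))
```

The recurrent version is linear in sequence length, but each step waits for the previous state update, which is exactly the kind of sequential dependency that maps poorly onto GPU parallelism during training.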

Combining Both with Lightning Attention

Lightning Attention addresses the shortcomings of both traditional and linear attention mechanisms.

It achieves this by doing 3 things:

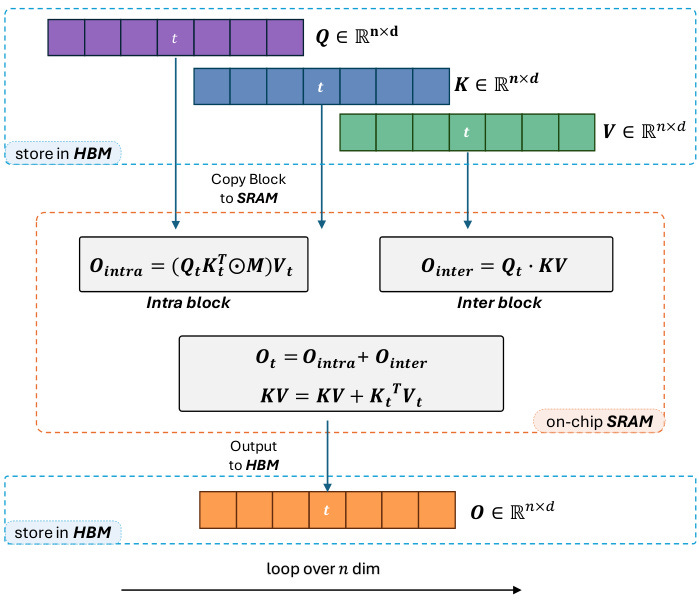

Hybrid Approach: Lightning Attention divides the attention computation into intra-block and inter-block operations. It uses the conventional attention computation (multiplying QK^T first, under a causal mask) within each block and the linear attention kernel trick between blocks, combining the strengths of both. It’s essentially a divide-and-conquer approach.

Tiling Technique: By adopting a tiling strategy similar to FlashAttention, Lightning Attention minimizes the need for cumsum operations, overcoming the training inefficiency of traditional linear attention. That’s where the intra and inter blocks come in. Do check this nice video for a refresher on that methodology.

IO-Friendly Implementation: The method is designed to be hardware-efficient, leveraging GPU parallelism and minimizing memory access overhead.

Figure 4: Lightning Attention splits the attention computation into intra-block and inter-block operations, using different strategies for each.
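Here is a minimal sketch of that intra/inter split for causal linear attention. Within a block it uses the masked left product (Q_b K_b^T ⊙ M) V_b, which is quadratic only in the block size; across blocks it uses the right product Q_b · KV, where KV accumulates K^T V from all earlier blocks. To keep it short it assumes no feature map, no normalization and no decay factor, and the function names are hypothetical, so it illustrates the tiling idea rather than reproducing Lightning Attention's actual kernel.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def causal_reference(q, k, v):
    """Quadratic reference: o_i = sum over j <= i of (q_i . k_j) v_j (no softmax, no decay)."""
    return [[sum(dot(q[i], k[j]) * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))]
            for i in range(len(q))]


def blockwise_causal(q, k, v, block_size=2):
    """Intra-block: masked left product. Inter-block: right product with a running KV state."""
    d, d_v = len(k[0]), len(v[0])
    kv = [[0.0] * d_v for _ in range(d)]  # sum of k_j v_j^T over all *previous* blocks
    out = []
    for start in range(0, len(q), block_size):
        qb = q[start:start + block_size]
        kb = k[start:start + block_size]
        vb = v[start:start + block_size]
        for i, qi in enumerate(qb):
            # Inter-block: q_i @ KV covers every token in earlier blocks at once.
            inter = [sum(qi[r] * kv[r][c] for r in range(d)) for c in range(d_v)]
            # Intra-block: (q_i . k_j) v_j with a causal mask (j <= i) inside this block.
            intra = [sum(dot(qi, kb[j]) * vb[j][c] for j in range(i + 1)) for c in range(d_v)]
            out.append([a + b for a, b in zip(inter, intra)])
        # Fold this block's K_b^T V_b into the state once its queries are done.
        for kj, vj in zip(kb, vb):
            for r in range(d):
                for c in range(d_v):
                    kv[r][c] += kj[r] * vj[c]
    return out


Q = [[0.2, -0.4], [1.0, 0.3], [-0.7, 0.5], [0.0, 0.8], [0.9, -0.1]]
K = [[0.1, 0.9], [-0.3, 0.2], [0.6, -1.2], [0.4, 0.4], [-0.5, 0.7]]
V = [[1.0, 0.0], [0.5, -1.0], [3.0, 1.0], [-2.0, 0.5], [0.25, 2.0]]
print(causal_reference(Q, K, V))
print(blockwise_causal(Q, K, V, block_size=2))
```

The result matches the quadratic reference for any block size. The only sequential loop is over blocks rather than tokens, and the work inside a block could be done as small dense matrix products that a GPU parallelizes well.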

The Core Results of the Minimax-01 Model

The Minimax-01 paper introduces a model architecture that integrates Lightning Attention with a Mixture of Experts (MoE) to achieve scalability and good performance.

Interestingly, it’s not a pure Lightning Attention model but a hybrid one, with 1 softmax attention block for every 7 Lightning Attention blocks!
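The interleaving itself is simple to express. A minimal sketch, assuming a hypothetical helper and an illustrative 16-layer stack (not the model's real depth), with the MoE feed-forward blocks left out:

```python
def hybrid_layer_pattern(num_layers, lightning_per_softmax=7):
    """Attention type per layer: one softmax layer after every
    `lightning_per_softmax` Lightning Attention layers (hypothetical helper)."""
    period = lightning_per_softmax + 1
    return ["softmax" if (i + 1) % period == 0 else "lightning"
            for i in range(num_layers)]


pattern = hybrid_layer_pattern(16)  # illustrative depth only
print(pattern.count("lightning"), pattern.count("softmax"))  # 14 2
```

Keeping the ratio as a parameter makes it easy to reason about the trade-off the paper describes: fewer softmax layers means cheaper long contexts, while at least some softmax layers help with retrieval.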

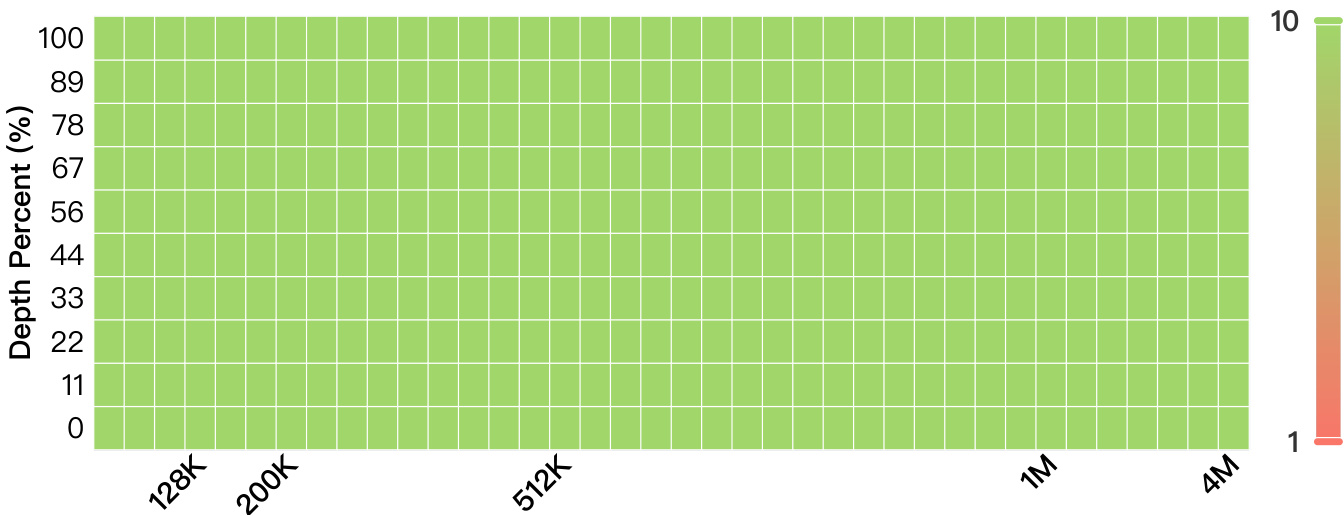

When only Lightning Attention is used, the model falls short on retrieval tasks like needle-in-a-haystack (NIAH).

Here are the key highlights:

Context Window Expansion: The model supports context windows of up to 1 million tokens during training and can extrapolate to 4 million tokens during inference.

Scalability: The model scales efficiently, with 456 billion total parameters and 45.9 billion activated parameters per token. However, getting it right takes quite a recipe: the full pre-training and post-training recipe is laid out in sections 4 and 5, and wow.

Performance: The model matches the performance of state-of-the-art models like GPT-4o and Claude-3.5-Sonnet while having a much larger context window (20-32 times longer).
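The gap between total and activated parameters comes from MoE routing: a router scores every expert for each token, and only the top-k experts actually run. With 45.9B of 456B parameters active, each token uses roughly 10% of the weights (45.9 / 456 ≈ 0.10). A minimal top-k routing sketch follows; k=2, the four toy experts and the router logits are illustrative assumptions, not Minimax-01's actual configuration, and the function names are hypothetical.

```python
import math


def top_k_route(router_logits, k=2):
    """Return {expert_index: gate} for the k highest-scoring experts.

    Gates are a softmax over the selected logits, which equals a softmax
    over all experts renormalized to the chosen k."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda e: router_logits[e], reverse=True)[:k]
    m = max(router_logits[e] for e in chosen)  # subtract max for numerical stability
    exps = {e: math.exp(router_logits[e] - m) for e in chosen}
    total = sum(exps.values())
    return {e: w / total for e, w in exps.items()}


def moe_forward(x, experts, router_logits, k=2):
    """Run only the routed experts and mix their outputs by gate weight."""
    gates = top_k_route(router_logits, k)
    out = [0.0] * len(x)
    for e, g in gates.items():
        y = experts[e](x)  # unselected experts are never called
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, sorted(gates)


# Four toy "experts" that just scale their input (hypothetical stand-ins for FFNs).
experts = [lambda x, s=s: [s * xi for xi in x] for s in (1.0, 2.0, 3.0, 4.0)]
out, used = moe_forward([1.0, -0.5], experts, router_logits=[0.1, 2.0, -1.0, 1.5])
print(used, out)
```

Only two of the four experts run for this token, which is the same mechanism that keeps the per-token compute of a 456B-parameter model closer to that of a ~46B dense one.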

3 Key Takeaways for Transformer-Based Deep Learning

Attention Mechanism Evolution: These results demonstrate that hybrid approaches can overcome the limitations of traditional and linear attention mechanisms. Expect to see more innovations that blend different attention strategies.

Scalability and Efficiency: As models grow in size and complexity, efficient scaling solutions like MoE and linear attention will become increasingly important. These techniques will be crucial for developing models that are both powerful and sustainable. It also seems that hybridizing different techniques makes a lot of sense for striking the right balance at scale.

Long-Context Understanding: The ability to process longer sequences might change how some applications that rely on RAG systems are set up.

Figure 6: This NIAH figure was pretty funny, haha.

Overall, pretty cool methodology.

There seems to be something fundamental about softmax that is still required to make large language models perform, but linear attention mechanisms bring a new perspective to these models.

I wouldn’t be surprised if the new Gemini 2.5 integrated linear attention in some way!