AI Engineering is Stochastic Software Development

It's not related to ML.

I’ve been surprised by how many people think that AI engineering is related in some way to machine learning.

There is very little in common between the two fields.

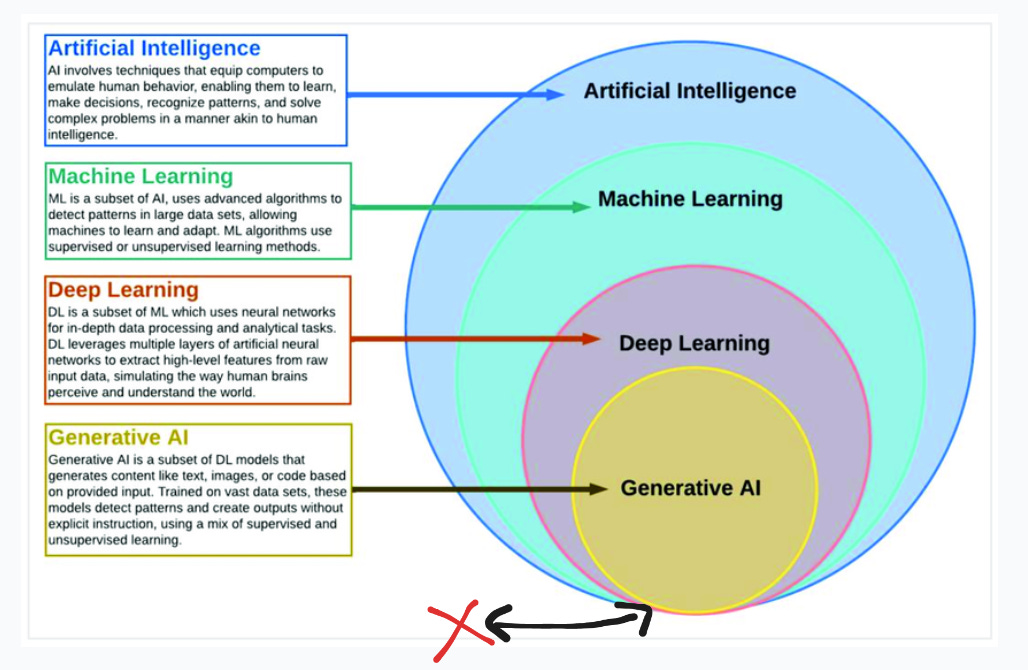

If we were to do the concentric circle diagram it would be the red X:

I’ll explain what I mean by that and why this confusion happens.

By the way, if you want to learn more about AI engineering in a hands-on way, do check out this great course by Scrimba!

Why are they not related?

At a glance, they should be, since generative AI sits inside the concentric circle right under deep learning.

However, an AI engineer doesn’t touch something fundamental to machine learning: the model’s weights.

Generally, an AI engineer shapes the input to a trained foundation model to get the output they need.
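To make “shaping the input” concrete, here is a minimal Python sketch, assuming the model is simply a function from a prompt string to an output string. `build_prompt`, `classify_sentiment`, and `toy_model` are hypothetical names made up for illustration, and `toy_model` is an offline stand-in for a real hosted model (no real SDK is used). Notice that nothing here touches weights: the engineer only controls the text that goes in and how the text that comes out is read.

```python
from typing import Callable

# Assumption: a foundation model is treated as a black box, prompt in, text out.
ModelFn = Callable[[str], str]


def build_prompt(task: str, examples: list[tuple[str, str]], user_input: str) -> str:
    """Assemble an instruction, a few worked examples, and the new input into one prompt."""
    lines = [f"Task: {task}", ""]
    for example_in, example_out in examples:
        lines.append(f"Input: {example_in}")
        lines.append(f"Output: {example_out}")
        lines.append("")
    lines.append(f"Input: {user_input}")
    lines.append("Output:")
    return "\n".join(lines)


def classify_sentiment(model: ModelFn, text: str) -> str:
    """Hypothetical task: only the prompt changes between calls, the model stays fixed."""
    prompt = build_prompt(
        task="Label the sentiment of the input as positive or negative.",
        examples=[("I love this!", "positive"), ("This is awful.", "negative")],
        user_input=text,
    )
    # Normalize the raw text the model returns into a clean label.
    return model(prompt).strip().lower()


if __name__ == "__main__":
    def toy_model(prompt: str) -> str:
        # Offline stub: looks only at the last input in the prompt.
        last_input = prompt.rsplit("Input: ", 1)[1]
        return " Positive" if "great" in last_input else " Negative"

    print(classify_sentiment(toy_model, "What a great day"))  # prints: positive
```

Swapping `toy_model` for a real model client changes nothing else in the sketch, which is kind of the point.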

On rare occasions, the AI engineer will dip a toe into the machine learning world by fine-tuning the model.

But models are now so big, and so complicated to train (many of the largest use mixture-of-experts architectures), that a smart AI engineer avoids this like the plague.

By the time you are done fine-tuning, a new model has come out that makes your use case easier to build.

The truth is, with one or more foundation models you can stitch together solutions that are highly intricate and useful.

Why do people confuse them?

They get confused for three main reasons:

1. People who don’t know how to program, understandably, put it in the same bucket as ML magic.
2. From the outside, AI engineering techniques look so complicated that they intuitively get lumped in with the rest of ML.
3. Classical machine learning systems have an element of “randomness” that people equate with the stochastic tools AI engineers are putting together.

But when you compare the workflow of an AI engineer with that of a machine learning engineer or scientist, it’s very clear that they sit at opposite ends of a research/engineering spectrum.

Machine learning starts with data: studying it, transforming it, and training a model on it to generate the desired output.

It finishes with a touch of engineering: productionizing the trained model so that the same flow runs for the customer.

AI engineering starts with an already-trained foundation model (aka big parameters). The engineer then uses (or doesn’t use) the customer’s data to orchestrate a solution that generates something of value, and deploys that system into production.

AI Engineering is Software Development of the Stochastic sort.

This is a weird thing to say, but that’s what it feels like.

You are dealing with the equivalent of a black-box API whose documentation is still being actively researched, and whose output changes for no apparent reason (even when the inputs stay the same).

This black-box API, however, is very good at a whole lot of things, since it has internet-scale knowledge of all things human.

It behaves unpredictably, accepts any input, and always outputs something, no matter how ridiculous the request is.

Managing this stochasticity, this uncertainty, while wiring the input/output in a way that generates value is the core skill of an AI engineer.
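Here is one common way to manage that stochasticity, as a minimal Python sketch: treat the model as an unreliable function, validate every output, and retry a bounded number of times before giving up. `call_with_validation` and `make_flaky_model` are hypothetical helpers, the flaky model is a seeded random stub standing in for a real model, and the sketch assumes the output you want is a JSON object with known keys.

```python
import json
import random
from typing import Callable, Optional

ModelFn = Callable[[str], str]


def call_with_validation(
    model: ModelFn,
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> Optional[dict]:
    """Return the first model output that is a JSON object containing all required keys."""
    for _ in range(max_attempts):
        raw = model(prompt)
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # Malformed output: ask again with the same prompt.
        if isinstance(parsed, dict) and required_keys.issubset(parsed):
            return parsed
        # Valid JSON but the wrong shape: fall through and retry.
    return None  # Out of attempts: the caller picks a fallback (error, default, human review).


def make_flaky_model(seed: int) -> ModelFn:
    """Hypothetical stub: same prompt in, different outputs out, some of them unusable."""
    rng = random.Random(seed)
    candidates = [
        '{"label": "positive", "reason": "upbeat tone"}',
        "Sure! Here is the JSON you asked for: {label: positive}",
        '{"label": "positive"}',
    ]

    def model(prompt: str) -> str:
        return rng.choice(candidates)

    return model


if __name__ == "__main__":
    flaky = make_flaky_model(seed=7)
    result = call_with_validation(flaky, "Classify: 'What a great day'", {"label", "reason"})
    print(result)  # Either the validated dict or None, depending on the random draws.
```

The design choice worth noticing is the `None` return: the wrapper doesn’t pretend the model always succeeds, it hands that decision back to the rest of the system.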

Taming this stochasticity while balancing automation is currently very difficult. Should you automate a whole workflow with these foundation models, or use them more as a copilot?

Balancing the value of generation against its risks is at the core of AI engineering.
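One way to frame the automate-or-copilot question in code is a small routing policy, sketched below in Python. The policy is an assumption for illustration, not a rule from this post: `Decision` and `route` are hypothetical names, `passes_checks` is whatever validation the caller supplies, and `high_stakes` is a made-up flag. Outputs that fail checks, or that matter too much to get wrong, go to a human; only low-stakes outputs that pass every check are applied automatically.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    action: str            # "auto" (apply directly) or "review" (a human decides)
    output: Optional[str]  # The generated output, if any, passed along either way


def route(
    output: Optional[str],
    passes_checks: Callable[[str], bool],
    high_stakes: bool,
) -> Decision:
    """Hypothetical policy: automate only low-stakes outputs that pass every check."""
    if output is None or not passes_checks(output):
        return Decision("review", output)  # Generation failed or looks wrong.
    if high_stakes:
        return Decision("review", output)  # Copilot mode: the model drafts, a human approves.
    return Decision("auto", output)        # Full automation for the safe cases.


if __name__ == "__main__":
    def looks_ok(text: str) -> bool:
        # Toy check standing in for real validation.
        return len(text) < 200 and "TODO" not in text

    print(route("Refund approved for order 123.", looks_ok, high_stakes=True).action)  # prints: review
    print(route("Tagged ticket as 'billing'.", looks_ok, high_stakes=False).action)    # prints: auto
```

The `high_stakes` branch is the whole automation dial: if it is always `False` you have full automation, and if it is always `True` you have a pure copilot.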

How to get good at AI engineering?

Build AI solutions. That’s it.

It would be like asking how to get good at frontend development.

Should you start by learning the deep computer science theory underlying the DOM, browser, and networking?

Yes, at some point. But to start you should build a bunch of frontend solutions!

Good luck! 👺